The invention of the automobile brought convenience to people's lives. In recent years, as artificial intelligence has been applied more widely and more deeply, autonomous driving has gradually entered the public eye, and driving seems to have become an easy task. In the research and development of autonomous driving technology, the first problem to solve is whether to use lidar or a camera as the main sensor. The two represent completely different systems: laser SLAM and visual SLAM.

Vehicle cameras are the basis for many warning and recognition functions in ADAS (advanced driver assistance systems). Among the many ADAS functions, visual imaging is the most basic and, because it is intuitive, the most important for drivers. The camera in turn is the foundation of the visual image processing system.

Lane Departure Warning, Forward Collision Warning, Traffic Sign Recognition, Pedestrian Collision Warning, Driver Fatigue Warning, and many other functions can be implemented with a camera, and some of them can only be implemented with a camera. Cameras also capture high-resolution images and classify objects better. So what are their disadvantages? "The data depth of cameras is not as deep as lidar," Magney said.

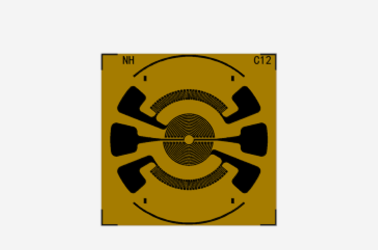

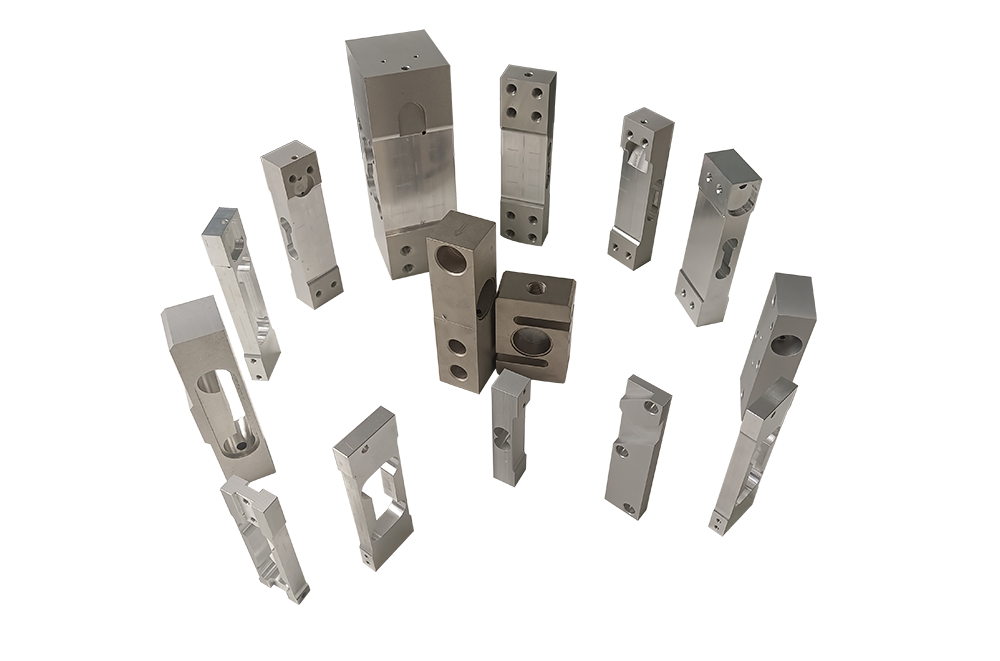

With the rise of autonomous driving, lidar has become more popular than ever. A LiDAR (Light Detection And Ranging) system combines a laser with a global positioning system (GPS) and an inertial measurement unit. It can distinguish real, moving pedestrians from pictures of people on posters, build a model of three-dimensional space, detect static objects, and measure distances accurately. Its working principle is easy to understand: it is a radar system that emits laser beams to detect the position, velocity, and other characteristics of a target, and it offers high measurement accuracy and precise direction finding.

In an ADAS system, the lidar uses its lens and its laser transmitting and receiving devices to obtain characteristic data about the target object, such as its position and speed, based on the time-of-flight (TOF) principle, and sends that data to the data processor. At the same time, characteristic data about the car itself, such as its speed, acceleration, and direction, is sent to the data processor over the CAN bus.
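The time-of-flight principle mentioned above can be illustrated with a minimal sketch. It assumes an idealized sensor that reports the round-trip time of a single laser pulse. The function names and the sample values are hypothetical and are not taken from any particular lidar product.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def tof_range_m(round_trip_s: float) -> float:
    """Distance to the target from the pulse's round-trip time.

    The pulse travels to the target and back, so the one-way
    distance is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def radial_speed_m_s(range_prev_m: float, range_curr_m: float, dt_s: float) -> float:
    """Rough radial speed from two successive range measurements.

    A negative value means the target is getting closer.
    This is an illustrative assumption about how speed could be
    estimated, not a description of a specific sensor.
    """
    if dt_s <= 0:
        raise ValueError("time step must be positive")
    return (range_curr_m - range_prev_m) / dt_s


if __name__ == "__main__":
    # A pulse that returns after 200 nanoseconds (hypothetical value).
    print(f"range: {tof_range_m(200e-9):.2f} m")
    # Target moved from 30 m to 29 m in 0.1 s (hypothetical values).
    print(f"radial speed: {radial_speed_m_s(30.0, 29.0, 0.1):.1f} m/s")
```

Because light covers roughly 0.3 m per nanosecond, even a small timing error translates into a noticeable range error, which is one reason real sensors need precise timing electronics.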

The data processor then combines the information about the target object with the information about the car itself and, based on the result, issues either passive warning instructions or active control instructions, thereby implementing the driver assistance functions.

Lidar is now the mainstream choice and is used in about 90% of autonomous driving projects. The driverless cars developed by companies such as Google, Audi, Ford, and Baidu basically all use lidar.

Lidar can also be divided by the number of laser beams into single-beam and multi-beam lidar. Single-beam lidar is mainly used for obstacle avoidance and measures the distance to surrounding obstacles very accurately. However, a single beam can only scan in a plane and cannot measure the height of objects. Multi-beam lidar makes up for this shortcoming: it adds a dimension, brings a qualitative improvement in scene reconstruction, and can recognize the height of objects.
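The difference between scanning in a plane and recovering height can be sketched with a small geometric example. It assumes a simplified model in which each return is described by a range, a horizontal (azimuth) angle, and a vertical (elevation) angle of the beam. The function name and the angles are hypothetical.

```python
import math


def beam_return_to_point(range_m: float, azimuth_deg: float, elevation_deg: float = 0.0):
    """Convert one lidar return to an (x, y, z) point in the sensor frame.

    A single-beam lidar has one fixed elevation (0 degrees here), so
    every point has z == 0. A multi-beam lidar fires beams at several
    elevation angles, which is what yields height information.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the scan plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


if __name__ == "__main__":
    # Single beam: the point lies in the scan plane.
    print(beam_return_to_point(10.0, 45.0))
    # Four beams at different (hypothetical) elevation angles.
    for elevation in (-5.0, 0.0, 5.0, 10.0):
        print(elevation, beam_return_to_point(10.0, 45.0, elevation))
```

With only the zero-elevation beam, an obstacle and a tall wall at the same distance look identical. The extra beams give each return a distinct z value.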

Multi-beam lidars with 4, 8, 16, 32, and 64 lines are currently available internationally. Multi-line lidars are mainly used in automotive radar imaging.

The main sensor for driverless control is either a camera or a lidar. The industry agrees on that much, but which one to use is still under debate. Some argue that the two are not really opposed: each technology has its own advantages and disadvantages, and having them complement each other is the more achievable route at this stage. Looked at more deeply, the choice of sensor is really a choice of technical route for autonomous driving. If the autonomous driving system is meant to drive the way a person does, then lidar is basically unnecessary: the vehicle can judge its surroundings dynamically and control itself by relying on its own knowledge base and rule library.

Others believe the real question is which of the two the industry will emphasize in practice. Tesla, for example, focuses on cameras and millimeter-wave sensors. However, because of the frequent Tesla accidents, more and more companies have begun to question this relatively low-cost, easy-to-deploy approach. The media have also reported seeing Tesla begin testing lidar-equipped models in North America. Autonomous driving is a systems engineering problem. Every sensor is indispensable, yet none can work alone. Only when the sensors cooperate well under real-world conditions can autonomous driving go as fast and as far as possible.